Deploying to Cosmonic Control

Cosmonic Control separates operator and developer concerns: Wasm component developers adopt their own tooling to create Wasm binaries, while operators use standard cloud-native pipelines and tooling to manage deployments.

In this section for operators, you'll learn...

- What are Cosmonic Control deployment manifests?

- How to manage ingress with Envoy

- How to deploy WebAssembly workloads with ingress

What are Cosmonic Control deployment manifests?

Deploying a workload to Cosmonic Control is the same as deploying any other resource to Kubernetes, using declarative Custom Resource Definition (CRD) manifests written in YAML.

Workload manifests can be deployed manually with kubectl and used in pipelines with tools like Argo CD. Manifests are composed according to the runtime.wasmcloud.dev/v1alpha1 API.

How to manage ingress with Envoy

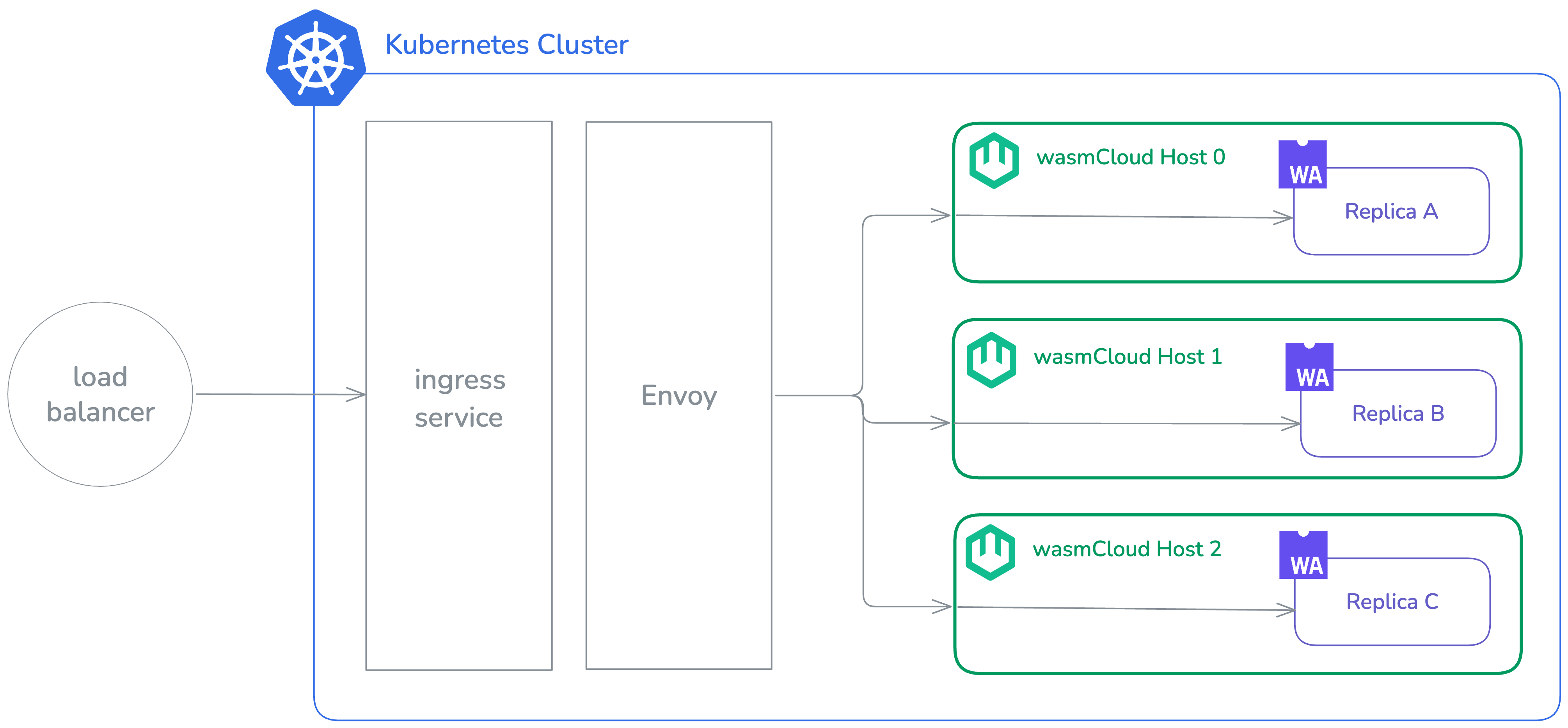

Cosmonic Control uses Envoy to expose WebAssembly workloads externally.

In a production deployment of Cosmonic Control, Envoy is deployed and configured alongside Control. The excerpt below, taken from the Argo CD Application manifest for Cosmonic Control, configures Envoy for use with a network load balancer on AWS.

    apiVersion: argoproj.io/v1alpha1
    kind: Application
    metadata:
      name: cosmonic-control
      namespace: argocd
    spec:
      project: default
      source:
        ...
        helm:
          values: |
            ...
            envoy:
              service:
                type: LoadBalancer
                annotations:
                  service.beta.kubernetes.io/aws-load-balancer-type: nlb
                  service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing
      destination:
        name: in-cluster
        namespace: cosmonic-system
      ...

With this configuration, an ingress service is available with an external IP address. The load balancer connects to Envoy pods, which in turn pass requests to a given WebAssembly component deployed with Cosmonic Control. If the component is running on multiple hosts, requests are load balanced (round robin) across those hosts. If one of those hosts crashes, the component continues to serve requests and the crashed host is removed from the load balancer rotation.
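The round-robin behavior described above can be illustrated with a small simulation. This is a conceptual sketch only, not Envoy's implementation: the `RoundRobinBalancer` class and the host names are hypothetical, and a real deployment detects failed hosts through health checking rather than an explicit `remove` call.

```python
class RoundRobinBalancer:
    """Toy round-robin balancer over host IDs (illustrative, not Envoy code)."""

    def __init__(self, hosts):
        self._hosts = list(hosts)
        self._next = 0  # index of the host that receives the next request

    def remove(self, host):
        """Take a crashed host out of the rotation."""
        idx = self._hosts.index(host)
        self._hosts.pop(idx)
        # Keep the cursor on the same upcoming host after the list shrinks.
        if idx < self._next:
            self._next -= 1
        self._next = self._next % len(self._hosts) if self._hosts else 0

    def pick(self):
        """Return the host that should receive the next request."""
        if not self._hosts:
            raise RuntimeError("no healthy hosts available")
        host = self._hosts[self._next]
        self._next = (self._next + 1) % len(self._hosts)
        return host


# Hypothetical host names for a component running on three hosts.
balancer = RoundRobinBalancer(["host-a", "host-b", "host-c"])
before = [balancer.pick() for _ in range(4)]
balancer.remove("host-b")  # simulate a host crash
after = [balancer.pick() for _ in range(4)]

print(before)  # ['host-a', 'host-b', 'host-c', 'host-a']
print(after)   # ['host-c', 'host-a', 'host-c', 'host-a']
```

After the simulated crash, requests keep flowing to the two remaining hosts and `host-b` no longer appears in the rotation.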

Configuring Envoy as a proxy with TLS

Envoy expects to handle HTTP, and may be fronted by another solution handling TLS termination, such as Cloudflare.

You can configure your solution to act as a proxy so that your public address (against which users will make requests) points to your ingress address. When your solution receives a request, it can process the request and forward it to the ingress as plain HTTP, regardless of the protocol the user initiated. A solution like Cloudflare uses SSL certificates managed by the service, so operators don't have to store certificates in the Kubernetes cluster or worry about their renewal.

With this approach, users can make HTTPS requests (for example) to an address bound to a component, and the fronting service performs the TLS termination that makes this possible.

How to deploy WebAssembly workloads with ingress

HTTP-driven WebAssembly workloads are deployed using the HTTPTrigger CRD, which requires two fields for each component:

- name: Name of the component

- image: OCI address for the component image

You will also need to specify the correct host for your ingress, and any interfaces that the component will use in addition to wasi:http. In practice, these fields are usually supplied as a values file for the [HTTP Trigger Helm chart](https://github.com/cosmonic-labs/control-demos/tree/main/charts/http-trigger). A typical values file might look like this:

    components:
      - name: blobby
        image: ghcr.io/cosmonic-labs/components/blobby:0.2.0
    ingress:
      host: 'blobby.localhost.cosmonic.sh'
    hostInterfaces:
      - namespace: wasi
        package: blobstore
        version: 0.2.0-draft
        interfaces:
          - blobstore
      - namespace: wasi
        package: logging
        version: 0.1.0-draft
        interfaces:
          - logging

In a deployment using Argo CD, the Argo CD Application for a WebAssembly workload with ingress might look as follows:

    apiVersion: argoproj.io/v1alpha1
    kind: Application
    metadata:
      name: demo-hello
      namespace: argocd
    spec:
      project: default
      source:
        path: .
        repoURL: oci://ghcr.io/cosmonic-labs/charts/http-trigger
        targetRevision: 0.1.2
        helm:
          values: |
            components:
              - name: http
                image: ghcr.io/cosmonic-labs/control-demos/hello-world:0.1.2
            ingress:
              host: "hello.wasmworkloads.io"
      destination:
        name: in-cluster
        namespace: hello
      syncPolicy:
        automated:
          prune: true
          selfHeal: true
        syncOptions:
          - ServerSideApply=true
          - CreateNamespace=true
          - RespectIgnoreDifferences=true
        retry:
          limit: -1
          backoff:
            duration: 30s
            factor: 2
            maxDuration: 5m

This Argo CD Application uses the HTTPTrigger Helm chart as a source and supplies the required values for a simple "Hello World" application: in this case, the name and image for one component and the host for the ingress.
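Before committing a values file to a pipeline, it can help to check that the fields named above are present. The following is a minimal sketch, assuming the values have already been parsed into a Python mapping (for example with a YAML library). The `validate_values` helper is hypothetical and is not part of the Helm chart or of Cosmonic Control; it checks only the fields this page describes.

```python
def validate_values(values):
    """Return a list of problems found in a parsed values mapping."""
    problems = []
    # Each component needs both a name and an OCI image reference.
    for i, component in enumerate(values.get("components") or []):
        for field in ("name", "image"):
            if not component.get(field):
                problems.append(f"components[{i}] is missing '{field}'")
    # The ingress needs a host to route requests to the workload.
    if not (values.get("ingress") or {}).get("host"):
        problems.append("ingress.host is required")
    return problems


good = {
    "components": [
        {
            "name": "http",
            "image": "ghcr.io/cosmonic-labs/control-demos/hello-world:0.1.2",
        }
    ],
    "ingress": {"host": "hello.wasmworkloads.io"},
}
bad = {"components": [{"name": "http"}]}  # no image, no ingress host

print(validate_values(good))  # []
print(validate_values(bad))
# ["components[0] is missing 'image'", 'ingress.host is required']
```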

Once deployed, this Argo CD Application creates an HTTPTrigger resource similar to the following:

    apiVersion: control.cosmonic.io/v1alpha1
    kind: HTTPTrigger
    metadata:
      name: example
      namespace: default
    spec:
      replicas: 1
      ingress:
        host: 'hello.localhost.cosmonic.sh'
        paths:
          - path: /
            pathType: Prefix
      template:
        spec:
          components:
            - name: http
              image: ghcr.io/cosmonic-labs/control-demos/hello-world:0.1.2

Using the paths field, we could bind multiple components to the same domain on different paths.
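To show how prefix-based path routing could separate components on one domain, here is a conceptual sketch. It assumes that `pathType: Prefix` follows the element-wise prefix matching that Kubernetes defines for Ingress, with the longest matching path winning; this page does not confirm that HTTPTrigger uses the same rules. The `frontend` and `api` component names and the `(path, component)` rule format are hypothetical.

```python
def path_elements(path):
    """Split a URL path into its non-empty elements."""
    return [part for part in path.split("/") if part]


def matches_prefix(rule_path, request_path):
    """Element-wise prefix match: '/api' matches '/api/users' but not '/apiary'."""
    rule = path_elements(rule_path)
    return path_elements(request_path)[: len(rule)] == rule


def route(rules, request_path):
    """Return the component whose rule is the longest matching prefix, or None."""
    candidates = [
        (path, component)
        for path, component in rules
        if matches_prefix(path, request_path)
    ]
    if not candidates:
        return None
    # The rule with the most path elements is the most specific match.
    return max(candidates, key=lambda rule: len(path_elements(rule[0])))[1]


# Hypothetical bindings: two components sharing one host.
rules = [("/", "frontend"), ("/api", "api")]

print(route(rules, "/api/users"))  # api
print(route(rules, "/apiary"))     # frontend
print(route(rules, "/"))           # frontend
```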

The HTTPTrigger creates and manages a WorkloadDeployment pre-populated with the interfaces for an HTTP incoming handler. The WorkloadDeployment, in turn, manages Workload and WorkloadReplicaSet resources.

Further reading

- You can find other examples of CRD manifests in our Template Catalog.

- See Custom Resources for more information on the relationships between Cosmonic Control CRDs.

Many existing wasmCloud applications include OAM-formatted YAML manifests designed for vanilla wasmCloud deployments. These OAM manifests are not Kubernetes-native and are not to be confused with the CRD manifests used by Cosmonic Control. (OAM manifests can be identified by the API version core.oam.dev/v1beta1.)

A wash plugin for easily converting OAM manifests to CRDs will be released soon.
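The API version check described above can be sketched as a small script for sorting through a repository of mixed manifests. This is an illustrative helper, not the forthcoming wash plugin: `classify_manifest` is a hypothetical name, it inspects only the first top-level `apiVersion` line in the text, and it treats the two API groups mentioned on this page as the CRD groups.

```python
import re

OAM_API_VERSION = "core.oam.dev/v1beta1"
# API groups that appear in the CRD manifests on this page.
CRD_API_GROUPS = ("runtime.wasmcloud.dev/", "control.cosmonic.io/")


def classify_manifest(text):
    """Classify manifest text as 'oam', 'crd', or 'unknown' by its apiVersion."""
    # Match only an unindented (top-level) apiVersion key.
    match = re.search(
        r"^apiVersion:\s*['\"]?([^'\"\s#]+)", text, flags=re.MULTILINE
    )
    if not match:
        return "unknown"
    api_version = match.group(1)
    if api_version == OAM_API_VERSION:
        return "oam"
    if api_version.startswith(CRD_API_GROUPS):
        return "crd"
    return "unknown"


oam_manifest = "apiVersion: core.oam.dev/v1beta1\nkind: Application\n"
crd_manifest = "apiVersion: control.cosmonic.io/v1alpha1\nkind: HTTPTrigger\n"

print(classify_manifest(oam_manifest))  # oam
print(classify_manifest(crd_manifest))  # crd
```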